The First Type of Transformative AI?

Ask 'what will AI transform?', not 'when?'. And what choices do we have about that?

I recently contributed to a discussion of the first type of transformative AI with Owen Cotton-Barratt and Lizka Vaintrob. It’s part of a series those two are working on (primarily, with some input from me and others), which expands on their agenda from last year and asks:

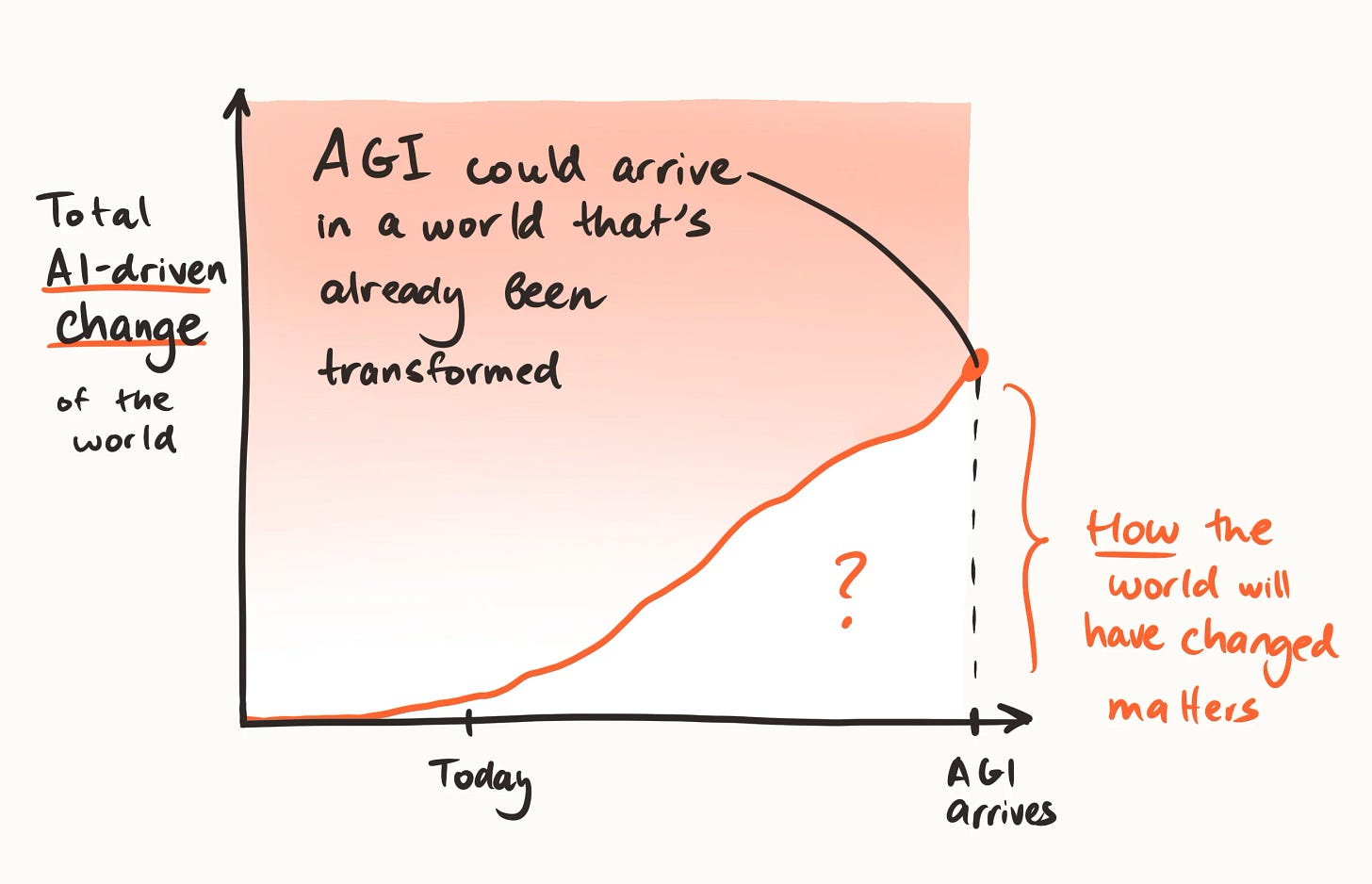

AI is not one big, singular thing. How can we bring forward the beneficial possibilities while delaying, or defending against, the harmful ones?

As I repeatedly emphasise to anyone who’ll listen: it’s never been just a dichotomy between ‘yes, good, more AI please’ and ‘no, bad, less AI thank you’. Nor is it only a case of making sure ‘the AIs’ are good (though this helps). How the tools and technological building blocks at our disposal are integrated into applications, workflows, and use-cases is just as important.

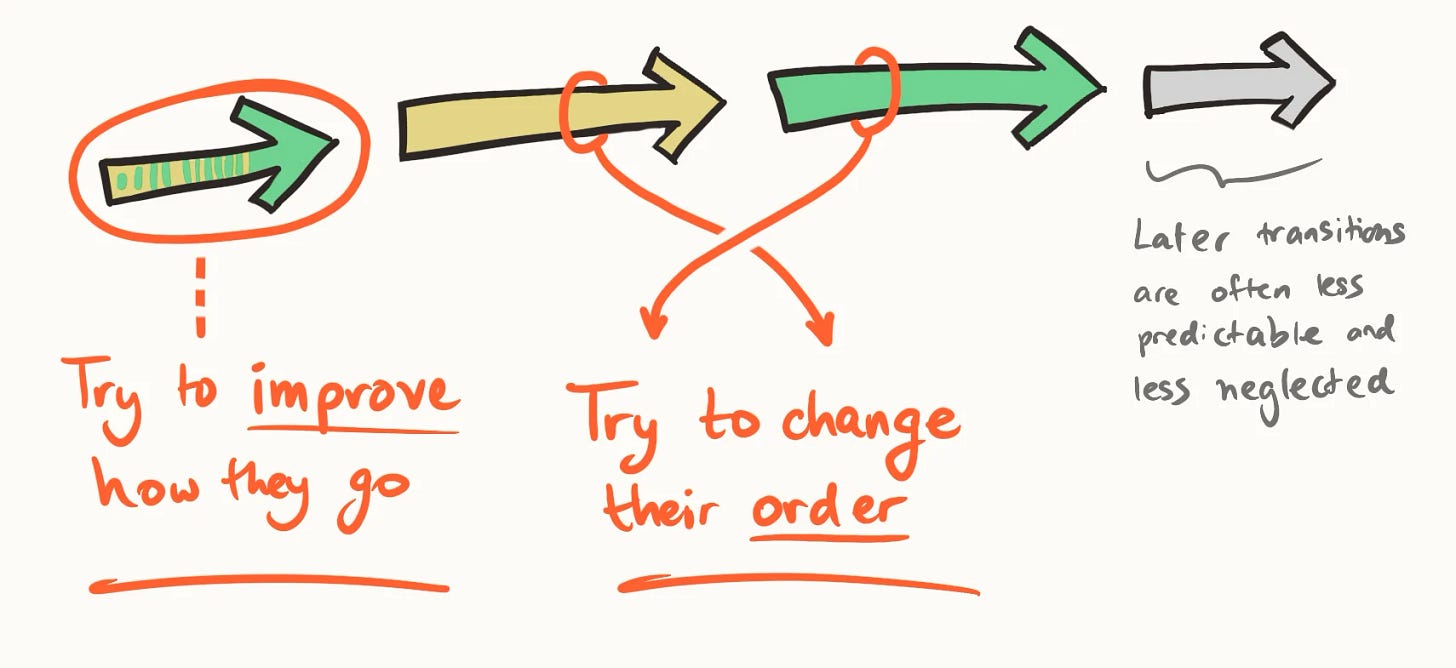

And the tools, products, and systems we choose to develop now will shape the context for subsequent developments, including by:

- equipping people to better predict and understand their options
- enabling people to coordinate better around preferred possibilities (which might otherwise be difficult due to mismatched incentives or race dynamics)
- giving people the tools to defuse or defend against hazardous developments, or their precursors

For technologists, futurists, philanthropists, legislators, experts, and other members of society trying to make tech progress go well, paying attention to which effects happen in what order, and to what options we have for choosing wisely there, looks like a really promising and neglected way of reducing large-scale risk and bringing about huge benefits.

You can read our fuller discussion (a few pages) for more on the scenarios we think are worth considering, including intelligence explosion, turbocharged economy, and epistemic uplift.