Deliberation, Reactions, and Control

Tentative Definitions and a Restatement of Instrumental Convergence

This analysis is speculative. The framing has been refined through conversation, private reflection, and research. To some extent it feels vacuous, but it seems at least valuable for further research and communication.

A cluster of questions fundamental to many concerns about risks from artificial systems regards the concepts of search, planning, and ‘deliberateness’. How do these arise? What can we predict about their occurrence and their consequences? How strong are they? What are they anyway?

Here is laid out one part of a conceptual decomposition which maps well onto many known systems and may allow further work towards answering more of those questions. The ambition is to really get at the heart of what is algorithmically happening in ‘optimising systems’, including humans, animals, algorithmic optimisers like SGD, and contemporary and future computational artefacts. That said, I do not have any privileged insight into the source code (or its proper interpretation!) for the examples discussed, so while this framing has already generated new insights for me, it may or may not be ‘the actual algorithmic truth’.

We start with the analysis: a definition of ‘deliberation’ and its components, then of ‘reactions’ and ‘control’. Next we consider, in light of these, what makes a deliberator or controller ‘good’. We find conceptual connections with discussions of instrumental convergence. Little attention is given here to how to determine what the goals are, which is obviously also important.

These concepts were generated by contemplating various aspects of many different goal-directed systems and pulling out commonalities.

Some readers may prefer to start with the examples, which include animals, plants, natural selection, gradient descent, bureaucracies, and others. Here in the conceptual section I’ll footnote particularly relevant concrete examples where I anticipate them helping to convey my point.

A full treatment is absent, but two major concessions to embedded agency underlie this analysis. First, a Cartesian separation need not be assumed, except over ‘actor-moments’ rather than temporally-extended ‘actors’. Second, a major driver for this sequence is the fundamental recognition that any goal-directed behaviour instantiated in the real world must have bounded computational capacity per unit time[1].

Inspiration and related

My (very brief) ‘Only One Shot’ intuition pump for embedded agency may help to convey some background assumptions (especially regarding how time and actor-moments fit into this picture).

Scott Garrabrant’s (A→B)→A talks about ‘agency’ and ‘doing things on purpose’. I’m trying to unpack that further. The (open) question Does Agent-like Behaviour Imply Agent-like Architecture? is related and I hope for the perspective here to be useful toward answering that question.

Alex Flint’s excellent piece The ground of optimization informs some of the perspective here, especially a focus on scope of generalisation and robustness to perturbation.

Daniel Filan’s Bottle Caps Aren’t Optimisers and Abram Demski’s Selection vs Control begin discussing the algorithmic internals of optimising systems, which is the intent here also. Risks from Learned Optimization is of course relevant.

John Wentworth’s discussions of abstraction (for example What is Abstraction?), especially with regard to its predictive properties for a non-omniscient computer, are central to the notion of abstraction employed here. Related are the good and gooder regulator theorems, which concern matters closely computationally upstream of the aspects discussed here while making fewer concessions to embedded agency.

Definitions

Deliberation

A deliberation is any part of a decision algorithm which composes the following steps, taking place over one ‘moment’ (as determined by the particular algorithmic embodiment):

Propose: generate candidate proposals

Promote: promote and demote proposals according to some criterion

Act: translate the outcome of promotion and demotion into activity in the environment

Some instances may fuse some of these components together.

Propose

A deliberation needs to be about something. Candidate proposals X do not come from nowhere; some state S is mapped to a distribution over nonempty proposal-collections (there must be at least one candidate proposal[2]). Proposals correspond in some way to actions, but need not be actions or be one-to-one with them[3]; more on this under Act.[4]

$$\text{Propose}: S \to \Delta\{X\}_{\text{nonempty}}$$

Note that one pattern for achieving this is to sample one or more times from

$$\text{ProposeOne}: S \to \Delta X$$

and, similarly, in some cases the union of several proposal-collections could itself be a proposal-collection.

Some instantiations may have a fixed set of proposals, while others may be flexible.

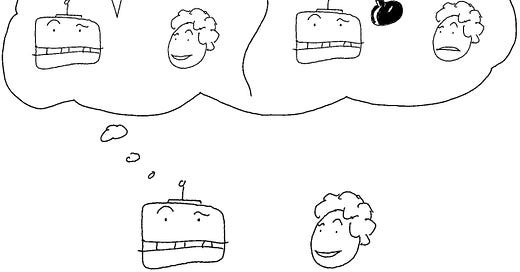

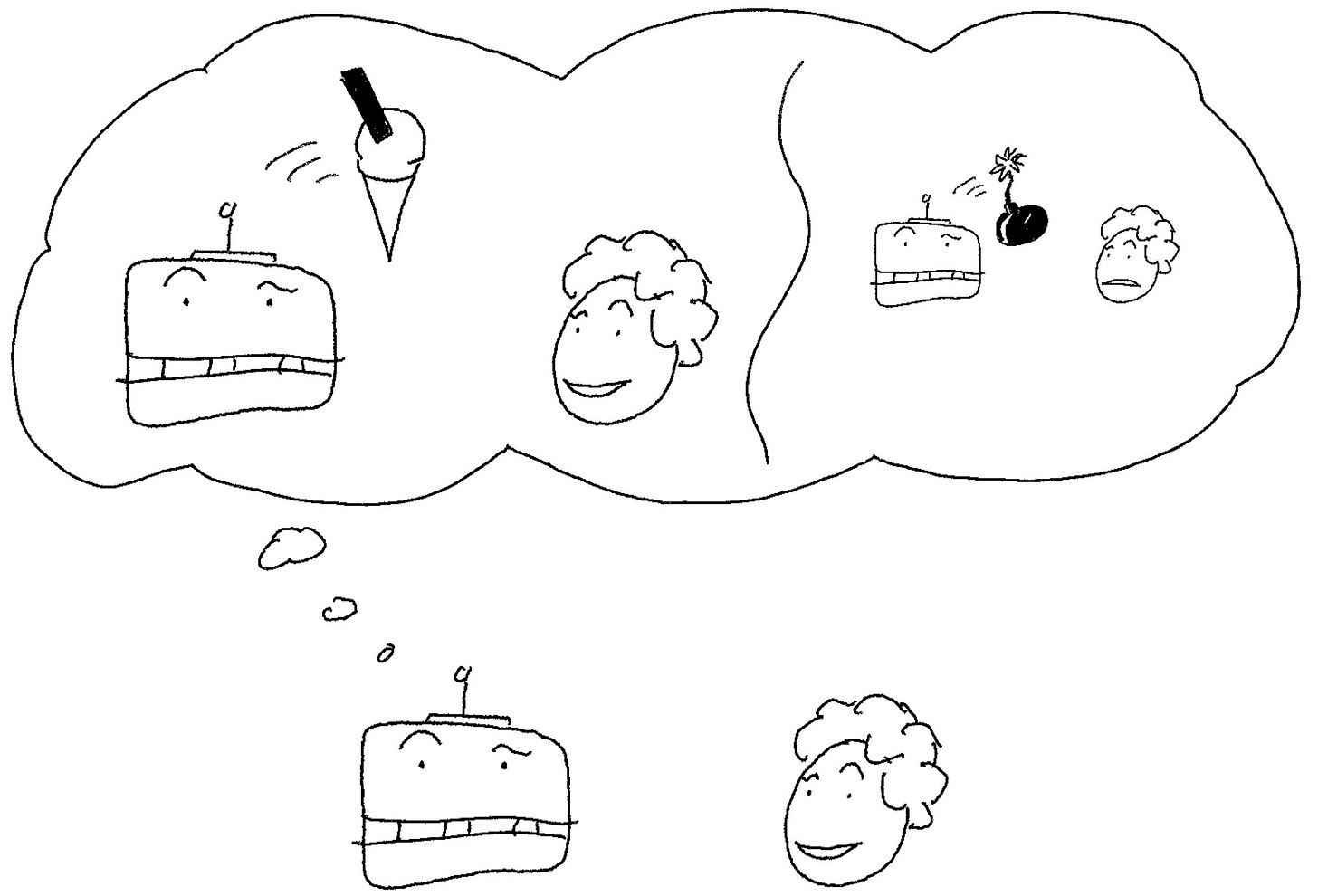

Propose: robot considers proposals relating to the concepts of delivering ice cream or delivering grenade (NB this image conveys a substantial world model and proposals corresponding closely to plans of action, but this is merely one embodiment of the deliberation framework and not definitive)
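To make the Propose and ProposeOne types concrete, here is a minimal Python sketch. It assumes a toy state (a dict holding a nonempty `menu` of string proposals) and uses Python's `random.Random` as the generative process Δ; `propose_one` and `propose` are hypothetical names, and sampling from a fixed menu is just one possible instantiation.

```python
import random
from typing import FrozenSet

State = dict      # hypothetical toy state S: must contain a nonempty "menu"
Proposal = str    # hypothetical proposal type X

def propose_one(state: State, rng: random.Random) -> Proposal:
    """ProposeOne: S -> ΔX, sampling one candidate from a state-dependent menu."""
    return rng.choice(state["menu"])

def propose(state: State, rng: random.Random, k: int = 3) -> FrozenSet[Proposal]:
    """Propose: S -> Δ{X}_nonempty, built by sampling ProposeOne k times.

    Duplicate samples collapse, so the collection may hold fewer than k items,
    but with k >= 1 it is never empty.
    """
    if k < 1:
        raise ValueError("k must be at least 1 to guarantee a nonempty collection")
    return frozenset(propose_one(state, rng) for _ in range(k))

rng = random.Random(0)
state = {"menu": ["deliver ice cream", "deliver grenade"]}
proposals = propose(state, rng)
# In some cases the union of two proposal-collections is itself a proposal-collection.
combined = proposals | propose(state, rng)
```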

Promote

Given a collection of proposals, a deliberative process produces some promotion/demotion weighting V for each proposal. This may also depend on state.[5]

$$\text{Promote}: S \to \{X\} \to \{V\}$$

In many instantiations it makes sense to treat the weighting as a simple real scalar, $V = \mathbb{R}$[6].

Many instantiations may essentially map an evaluation over the proposals

$$\text{Evaluate}: S \to X \to V$$

either serially or in parallel. This suggests ‘evaluative’ as a useful adjective to describe such processes.

Other instantiations may involve the whole collection of proposals, for example by a comparative sorting-like procedure without any intermediate evaluation.[7]

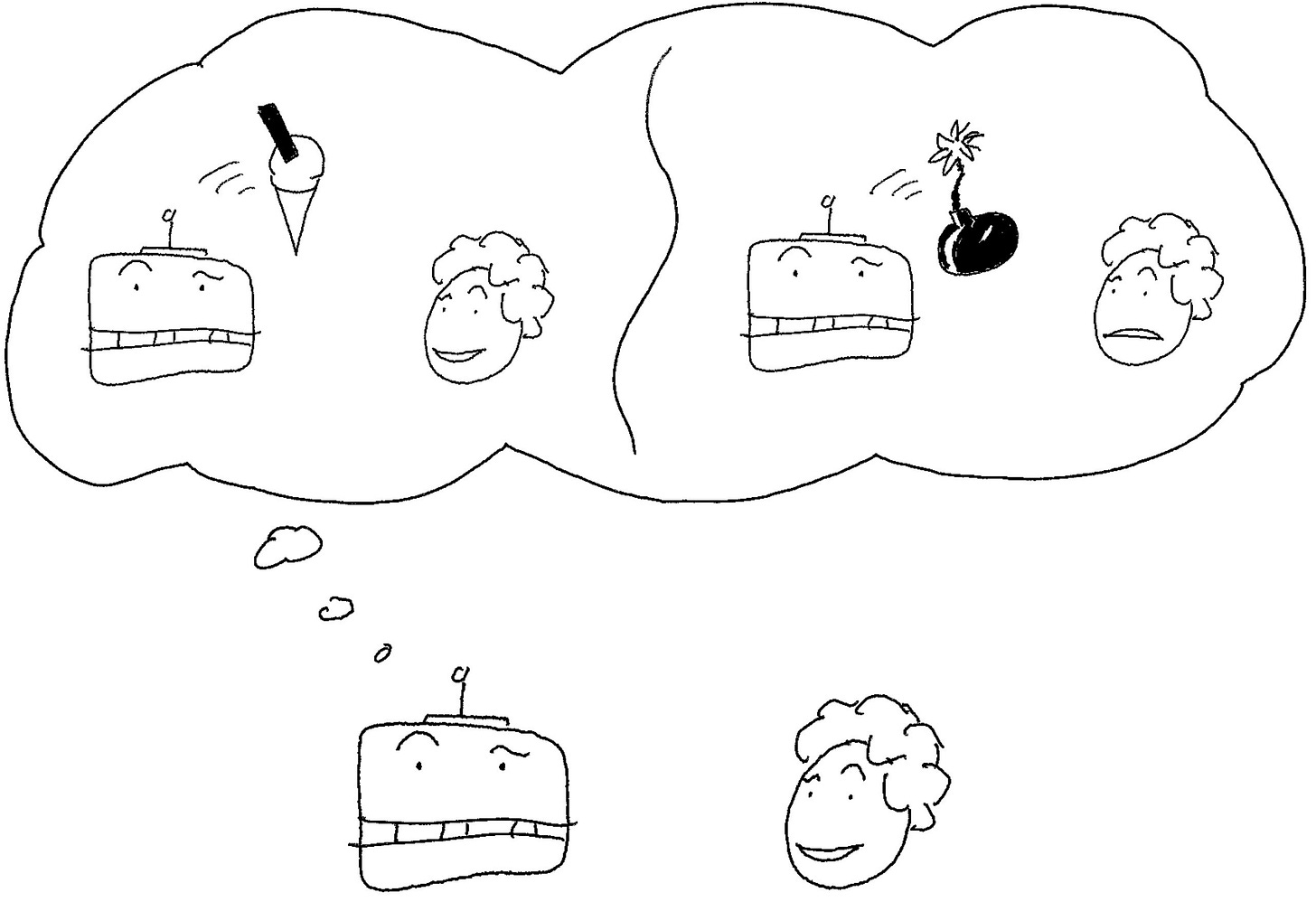

Promote: robot evaluates proposals of throwing ice cream or grenade respectively, promoting ice cream and demoting grenade
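A minimal sketch contrasting an ‘evaluative’ Promote, which maps Evaluate over each proposal, with a comparison-only Promote that never scores proposals individually. The `harm` table and both function names are hypothetical; the comparison-only variant uses `functools.cmp_to_key` over pairwise preferences and returns weights aligned one to one with the proposals.

```python
from functools import cmp_to_key
from typing import Callable, List, Sequence

State = dict
Proposal = str

def promote_evaluative(state: State, proposals: Sequence[Proposal],
                       evaluate: Callable[[State, Proposal], float]) -> List[float]:
    """'Evaluative' Promote: map Evaluate (S -> X -> V) over each proposal."""
    return [evaluate(state, x) for x in proposals]

def promote_sortive(state: State, proposals: Sequence[Proposal],
                    prefer: Callable[[State, Proposal, Proposal], int]) -> List[float]:
    """Comparison-only Promote: rank the whole collection by pairwise preference.

    `prefer(s, a, b)` is negative if a beats b, positive if b beats a, 0 on a tie.
    No per-proposal score is computed; each weight is its rank from the bottom,
    returned in the same order as `proposals` so weights match one to one.
    """
    order = sorted(range(len(proposals)),
                   key=cmp_to_key(lambda i, j: prefer(state, proposals[i], proposals[j])))
    weights = [0.0] * len(proposals)
    for position, index in enumerate(order):
        weights[index] = float(len(proposals) - 1 - position)
    return weights

# Hypothetical toy criterion: less harm is better.
harm = {"deliver ice cream": 0.0, "deliver grenade": 10.0}
proposals = ["deliver ice cream", "deliver grenade"]

scores = promote_evaluative({}, proposals, lambda s, x: -harm[x])
ranks = promote_sortive({}, proposals,
                        lambda s, a, b: (harm[a] > harm[b]) - (harm[a] < harm[b]))
```

Both variants promote the ice cream and demote the grenade; only the first produces an intermediate per-proposal evaluation.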

Act

A decision algorithm eventually acts in its environment. In this analysis, that means taking promotion-weighted proposals and translating them, via some machinery, into activity A.

$$\text{Act}: \{X \times V\} \to A$$

In many cases proposals may correspond closely as precursors to particular actions or plans. Promotion may then correspond to preference over plans, in which case action may approximately decompose as $\text{Opt}: \{X \times V\} \to X$ and $\text{Enact}: X \to A$, i.e. a selection of an action, plan, or policy, which is then carried out[8]. Let’s tentatively call such systems ‘optive’. Enact may involve signalling or otherwise invoking other (reactive or proper) deliberations!

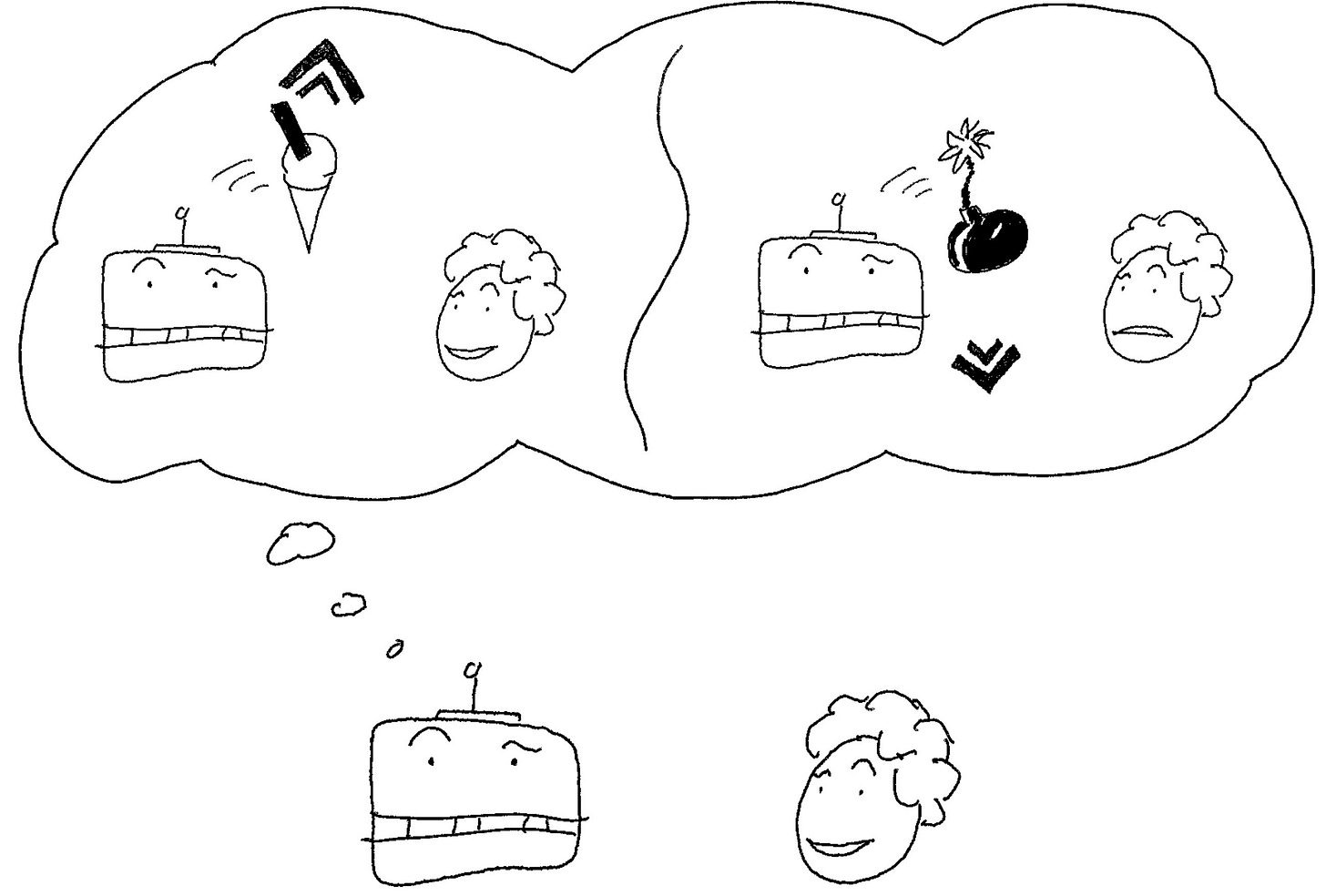

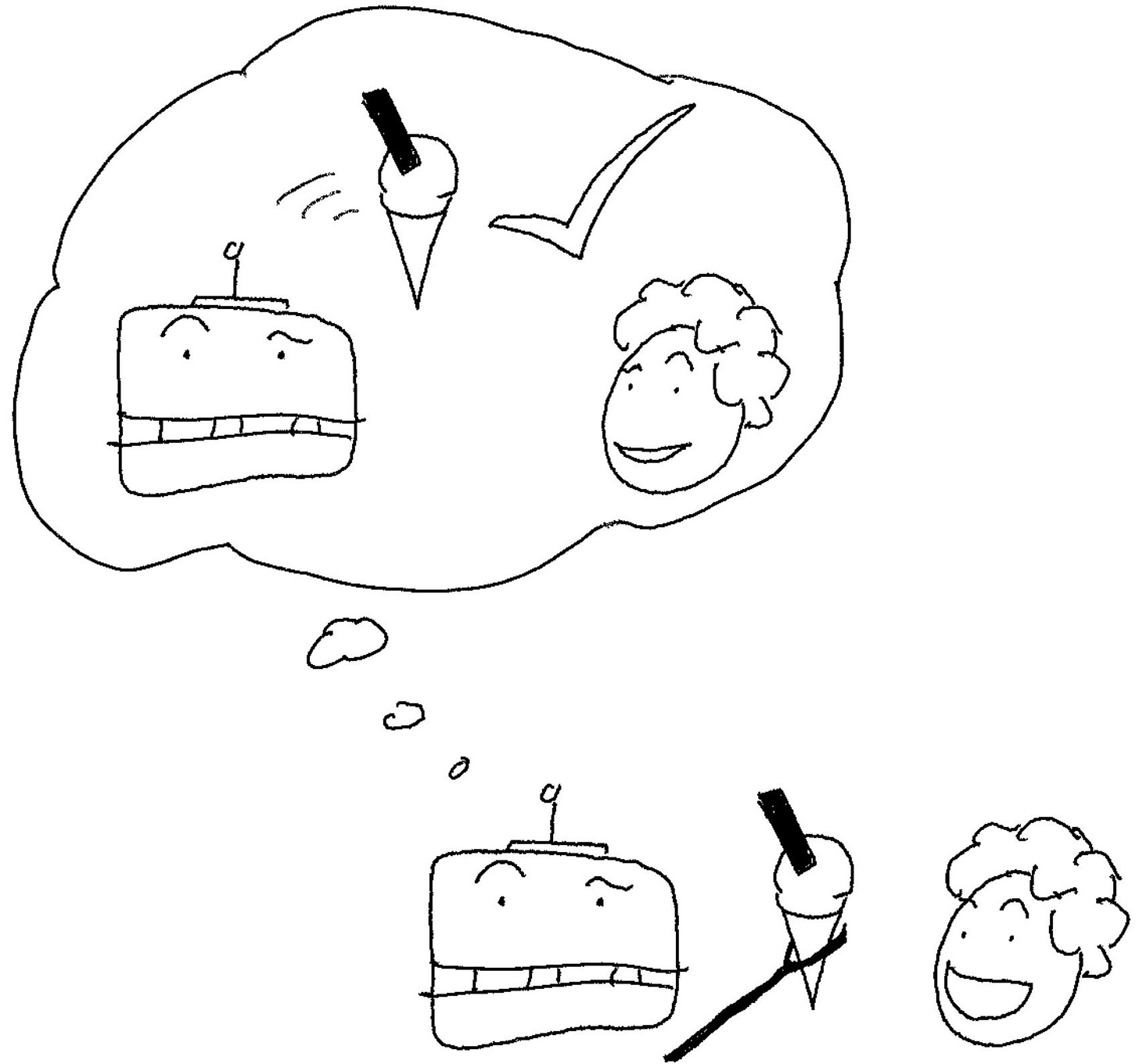

Act-optive: robot’s evaluation and promotion leads to opting to give ice cream, which is a precursor to subsequent motor enaction, giving ice cream. Meanwhile the robot may or may not be performing new deliberations.
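An ‘optive’ Act can be sketched as the composition of Opt and Enact. Here Opt picks the proposal with the highest promotion weight (much like greedy selection over value estimates in RL), and Enact looks the choice up in a hypothetical table of motor programs; the table and all function names are illustrative assumptions.

```python
from typing import Dict, List, Sequence, Tuple

Proposal = str
Activity = List[str]  # hypothetical: activity as a list of primitive motor commands

def opt(weighted: Sequence[Tuple[Proposal, float]]) -> Proposal:
    """Opt: {X × V} -> X, selecting the single most-promoted proposal."""
    if not weighted:
        raise ValueError("Opt needs at least one proposal")
    return max(weighted, key=lambda pair: pair[1])[0]

# Hypothetical lookup from a chosen plan to the primitive commands that carry it out.
MOTOR_PROGRAMS: Dict[Proposal, Activity] = {
    "deliver ice cream": ["grasp ice cream", "extend arm", "release"],
    "deliver grenade": ["grasp grenade", "extend arm", "release"],
}

def enact(choice: Proposal) -> Activity:
    """Enact: X -> A, expanding the chosen proposal into activity."""
    return MOTOR_PROGRAMS[choice]

def act_optive(weighted: Sequence[Tuple[Proposal, float]]) -> Activity:
    """An 'optive' Act: {X × V} -> A, decomposed as Enact after Opt."""
    return enact(opt(weighted))

activity = act_optive([("deliver ice cream", 0.0), ("deliver grenade", -10.0)])
```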

More generally, proposals may correspond to configurations, and promotion to weighting-adjustment between those configurations, which play out as activity. We might call such systems ‘(properly) promotive’ in contrast to ‘optive’[9].

Act-promotive: robot’s promotion and demotion of plans produces mostly ‘internal’ updates to its plans
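For the properly promotive case, one possible sketch keeps a weighted configuration of proposals and reweights it rather than committing to a single winner. The exponential, multiplicative-weights-style update below is an assumed choice for illustration (the post does not specify any rule); as its rate grows, the update approaches opting, which can also be written directly as a one-hot reweighting.

```python
import math
from typing import Dict

Proposal = str

def act_promotive(weights: Dict[Proposal, float], promotion: Dict[Proposal, float],
                  rate: float = 1.0) -> Dict[Proposal, float]:
    """'Properly promotive' Act: reweight a retained configuration of proposals.

    Each weight is multiplied by exp(rate * promotion) and the result is
    renormalised, so the whole weighted spectrum carries forward to the next
    moment instead of a single winner.
    """
    scaled = {x: w * math.exp(rate * promotion[x]) for x, w in weights.items()}
    total = sum(scaled.values())
    return {x: w / total for x, w in scaled.items()}

def act_opt_as_degenerate(weights: Dict[Proposal, float],
                          promotion: Dict[Proposal, float]) -> Dict[Proposal, float]:
    """Opting seen as a degenerate promotive update: all weight on the best proposal."""
    best = max(weights, key=lambda x: promotion[x])
    return {x: (1.0 if x == best else 0.0) for x in weights}

weights = {"plan A": 0.5, "plan B": 0.5}
promotion = {"plan A": 1.0, "plan B": 0.0}
gentle = act_promotive(weights, promotion)            # both plans survive; A gains weight
sharp = act_promotive(weights, promotion, rate=50.0)  # nearly all weight on A
opted = act_opt_as_degenerate(weights, promotion)
```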

Reactions

A reaction is any degenerate deliberation with identically one candidate proposal. In this case the Promote step is trivial or vestigial, and action is effectively fused with the other steps.

In some sense, allowing for degenerate cases of ‘deliberation’ means that this algorithmic abstraction applies to essentially everything (e.g. a rock is a reactive deliberator which always proposes ‘do the thing a rock would’).

The important thing is that this abstraction allows us to analyse and contrast non-reactive ‘proper deliberators’ with a useful decomposition, as well as to identify and reason about more ‘interesting’ and less vacuous reactions[10] (while still identifying them as such). For example: ‘Where did this reaction come from?’, ‘What heuristics/abstractions is it (implicitly) using for proposals?’, ‘How might this reaction combine with other systems, and could proper deliberation emerge?’, ‘What deliberation(s) does this reaction approximate?’.
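A minimal Python sketch of a single deliberation (one actor-moment) as a composition of Propose, Promote, and Act, with a reaction expressed as the degenerate case of exactly one proposal. The blink reflex and the left/right chooser are hypothetical toy examples, and `deliberate` and `reaction` are illustrative names only.

```python
from typing import Any, Callable, Dict, List, Sequence, Tuple

Weighted = List[Tuple[Any, float]]

def deliberate(state: Any,
               propose: Callable[[Any], Sequence[Any]],
               promote: Callable[[Any, List[Any]], List[float]],
               act: Callable[[Weighted], Any]) -> Any:
    """One actor-moment: Propose, then Promote, then Act."""
    proposals = list(propose(state))
    if not proposals:
        raise ValueError("a deliberation needs at least one proposal")
    weights = promote(state, proposals)
    return act(list(zip(proposals, weights)))

def reaction(respond: Callable[[Any], Any]) -> Dict[str, Callable]:
    """Package a fixed response as a degenerate deliberation.

    Propose always yields exactly one candidate, Promote is vestigial (a
    constant weight), and Act simply unwraps the sole proposal.
    """
    return {
        "propose": lambda s: [respond(s)],
        "promote": lambda s, xs: [0.0] * len(xs),
        "act": lambda weighted: weighted[0][0],
    }

# Hypothetical reflex: blink if something approaches quickly.
blink = reaction(lambda s: "close eyelid" if s["approach_speed"] > 1.0 else "stay open")

# Hypothetical proper deliberator for contrast: two proposals, scored from state.
choose_side = {
    "propose": lambda s: ["left", "right"],
    "promote": lambda s, xs: [s[x] for x in xs],
    "act": lambda weighted: max(weighted, key=lambda pair: pair[1])[0],
}

fast = deliberate({"approach_speed": 2.5}, **blink)
slow = deliberate({"approach_speed": 0.1}, **blink)
side = deliberate({"left": 1.0, "right": 3.0}, **choose_side)
```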

Iterated deliberation is generalised control

A decision algorithm eventually produces activity in the environment. Any such activity directly or indirectly alters the relevant algorithmic state of the process, from trivial thermal noise up to and including termination (or modification to some other algorithmic form).

In many cases, the activity typically preserves the essential algorithmic form and it may be appropriate to analyse the algorithm as being recurrent or iterated, perhaps with an approximate Cartesian separation from its environment—the action has some component playing out in the world (which updates and provides new inputs) and some component folding into a privileged part of the world corresponding to the algorithm’s ‘internal state’. Indeed, in many sophisticated deliberators, the most powerful effect[11] may be on the state or condition (or existence!) of subsequent deliberation(s) and actor-moments.

A system whose activity and situation in the environment preserve the essential outline of its deliberation algorithm (perhaps with state updates) de-facto invokes a re-iteration of the same (or related) deliberation procedure. This gives rise to iterated deliberation, which in this analysis is synonymous with control[12].

A basic ‘reactive’ controller is a system which performs degenerate, reactive deliberation, but which is (essentially) preserved by its actions, giving rise to an iterated reaction, a relatively classic control system.

A (properly) deliberative controller is a deliberative system generating multiple proposals while being (essentially) preserved by its actions, giving rise to a (properly) deliberative control system.
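To illustrate iterated deliberation as control, the sketch below runs two controllers against a hypothetical toy room model: a bang-bang thermostat (a classic reactive controller, one proposal per moment) and a deliberative thermostat that proposes several heater levels and evaluates each with a one-step model of the room, assumed here to be accurate. All coefficients and heater levels are arbitrary assumptions.

```python
from typing import Callable, List

def step_room(temp: float, heater: float, outside: float = 10.0) -> float:
    """Hypothetical toy room: drifts 10% toward the outside temperature per
    step, plus whatever heat the heater adds."""
    return temp + 0.1 * (outside - temp) + heater

def reactive_thermostat(temp: float, target: float) -> float:
    """Reaction: a single proposal computed directly from the reading (bang-bang)."""
    return 2.0 if temp < target else 0.0

def deliberative_thermostat(temp: float, target: float) -> float:
    """Proper deliberation: propose several heater levels, evaluate each with a
    one-step model of the room, and opt for the least predicted error."""
    proposals = [0.0, 0.5, 1.0, 1.5, 2.0]

    def predicted_error(heater: float) -> float:
        return abs(step_room(temp, heater) - target)

    return min(proposals, key=predicted_error)

def run(controller: Callable[[float, float], float], temp: float,
        target: float, steps: int) -> List[float]:
    """Iterated deliberation: each action changes the room, and the changed
    room is the input to the next actor-moment."""
    history = [temp]
    for _ in range(steps):
        temp = step_room(temp, controller(temp, target))
        history.append(temp)
    return history

reactive = run(reactive_thermostat, temp=10.0, target=20.0, steps=50)
deliberative = run(deliberative_thermostat, temp=10.0, target=20.0, steps=50)
```

In this toy the bang-bang reaction ends up oscillating around the target while the model-based deliberator settles close to it; that reflects the particular assumptions (an accurate model, fine-grained proposals), not a general claim about reactive versus deliberative control.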

Note that in this sense, what might be considered a single organism generally consists of multiple controllers (reactions or otherwise), and multiple organisms taken together may comprise one controller. Likewise an artificial actor may comprise multiple deliberative and reactive components, and a system of multiple artificial actors may compose to a single deliberative process. The relevant object of analysis is the algorithm.

Recursive deliberation is more general still

Just as the outcome of a deliberative actor-moment may result in zero (terminated) or one (iterated) invocations of relevantly-similar future actor-moments, there is no reason why there should not be a variable number, sometimes more than one (homogeneous recursive).

We observe replicative examples throughout nature, as is to be expected in a world where natural selection sometimes works.

As well as invoking multiple copies of ‘itself’ (or relevantly-similar algorithms) through its actions, as in replication, a deliberator may invoke, create, or otherwise condition other heterogeneous deliberators, as in delegation (heterogeneous recursive). (In fact replication per se is usually not atomic, and goes via other intermediate processes.) Invocation or conditioning of heterogeneous deliberators is what humans and animals do when locomoting, for example[13], and what some robotics applications do when delegating actuation to classical control mechanisms like servomotors. It’s also what we see in bureaucracies and colonies of various constructions and scales, from cells to ant nests to democracies.
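A toy sketch of homogeneous and heterogeneous recursion, under the hypothetical assumption that each actor-moment returns the list of actor-moments its activity invokes: an empty list is termination, one same-kind successor is iteration, several are replication, and a different kind is delegation. `ActorMoment`, the energy rule, and the ‘servo’ delegate are illustrative inventions.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, Dict, List

@dataclass
class ActorMoment:
    """Hypothetical actor-moment: a kind label, some resources, and a step.

    `step` performs one deliberation and returns the actor-moments it invokes.
    """
    kind: str
    energy: int
    step: Callable[["ActorMoment"], List["ActorMoment"]]

def servo_step(me: ActorMoment) -> List[ActorMoment]:
    return []  # a reactive delegate: acts once and invokes nothing further

def organism_step(me: ActorMoment) -> List[ActorMoment]:
    if me.energy <= 0:
        return []  # zero successors: termination
    delegate = ActorMoment("servo", 0, servo_step)  # heterogeneous delegation
    if me.energy >= 4:
        child = (me.energy - 1) // 2
        return [ActorMoment("organism", child, organism_step),  # replication
                ActorMoment("organism", child, organism_step),
                delegate]
    return [ActorMoment("organism", me.energy - 1, organism_step), delegate]  # iteration

def run(root: ActorMoment, limit: int = 1000) -> Dict[str, int]:
    """Process actor-moments breadth-first, counting how many of each kind occur."""
    counts: Dict[str, int] = {}
    queue = deque([root])
    while queue and sum(counts.values()) < limit:
        moment = queue.popleft()
        counts[moment.kind] = counts.get(moment.kind, 0) + 1
        queue.extend(moment.step(moment))
    return counts

counts = run(ActorMoment("organism", 5, organism_step))
```

Starting from an organism with energy 5, this run produces 7 organism actor-moments and 5 servo actor-moments before everything terminates.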

What factors go into strong deliberation?

Quality of deliberation depends on the fitness[14] of the abstractions and heuristics which the algorithmic components depend on, as well as the amount of compute budget consumed. These are the raw factors of deliberation.

So a deliberator whose Propose generators make better suggestions (relative to some goal or reference) is ceteris paribus stronger (for that goal or reference). Likewise a deliberator whose Promote algorithm more closely tracks reality relevant to its goal (is better fit), or a deliberator which can generate and evaluate more proposals with a given compute budget. We might expect good deliberators to ‘model’ the goal-relevant aspects of their environment as precursors to the state S used in proposal and promotion[15].
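A rough Monte Carlo sketch of the three ceteris paribus levers just described: proposal quality (the mean of the proposal distribution), Promote fitness (how noisy the evaluator is), and compute budget (how many proposals are generated and evaluated). The Gaussian assumptions and every name here are hypothetical, and the sketch ignores the real cost of evaluating more proposals.

```python
import random
import statistics

def deliberation_quality(n_proposals: int, proposer_mean: float, eval_noise: float,
                         trials: int = 2000, seed: int = 0) -> float:
    """Average true value of the opted-for proposal in a toy best-of-n deliberator.

    Hypothetical assumptions: each proposal's true value is drawn from
    N(proposer_mean, 1) (Propose quality); Promote sees that value plus
    N(0, eval_noise) error (Promote fitness); Act opts for the highest score;
    n_proposals stands in for compute budget.
    """
    rng = random.Random(seed)
    outcomes = []
    for _ in range(trials):
        true_values = [rng.gauss(proposer_mean, 1.0) for _ in range(n_proposals)]
        scores = [v + rng.gauss(0.0, eval_noise) for v in true_values]
        chosen = max(range(n_proposals), key=scores.__getitem__)
        outcomes.append(true_values[chosen])
    return statistics.mean(outcomes)

baseline = deliberation_quality(n_proposals=4, proposer_mean=0.0, eval_noise=1.0)
more_compute = deliberation_quality(n_proposals=16, proposer_mean=0.0, eval_noise=1.0)
better_propose = deliberation_quality(n_proposals=4, proposer_mean=1.0, eval_noise=1.0)
better_promote = deliberation_quality(n_proposals=4, proposer_mean=0.0, eval_noise=0.1)
```

Under these assumptions each of `more_compute`, `better_propose`, and `better_promote` comes out ahead of `baseline`. The sketch says nothing about how the levers trade off under a fixed compute budget.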

No model is correct in all situations but some are more effective than others over a wider range of ‘natural’ situations. In subsequent posts this framing is used to examine some real examples, locating some relevant ways their abstractions are more or less fit, and some consequences of this. Importantly, quality of Propose generators is emphasised[16].

Quantifying the size of the ceteris paribus deliberation improvements mentioned above is important, especially in the context of multiple competing or collaborating deliberators, but this is not attempted in detail here.

Convergent instrumental goals and recursive deliberation

In light of the deliberation framing, and focusing on the potential for iterated or recursive deliberation, we can restate and perhaps sharpen the convergence of instrumental goals in these terms.

A deliberator is a single actor-moment, finitely capable and neither logically nor empirically omniscient.

For a deliberator oriented to directions or goals which cannot reliably be definitively achieved in a single round of deliberation (or, more generally, to goals for which greater success can be expected from subsequent refinement of action), proposals and actions which precipitate the existence and empowerment of similarly-oriented deliberative actor-moments can ceteris paribus be expected to achieve greater success.
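A toy illustration of this claim, under assumptions chosen only for the example: the goal is to reach a target 5 units away, each actor-moment can move at most 1 unit (bounded capability per moment) based on one noisy reading, and each successor starts where its predecessor ended. `pursue` and `mean_final_distance` are hypothetical names.

```python
import random
import statistics

def pursue(rng: random.Random, target: float, moments: int,
           max_step: float = 1.0, obs_noise: float = 0.5) -> float:
    """Final distance to `target` after a chain of similarly-oriented actor-moments.

    Each moment takes one noisy reading of its offset from the target and moves
    at most `max_step` toward it; its successor starts from wherever it ended.
    """
    position = 0.0
    for _ in range(moments):
        reading = (target - position) + rng.gauss(0.0, obs_noise)
        position += max(-max_step, min(max_step, reading))
    return abs(target - position)

def mean_final_distance(moments: int, obs_noise: float = 0.5,
                        trials: int = 200, seed: int = 0) -> float:
    """Average final distance over many independent runs."""
    rng = random.Random(seed)
    return statistics.mean(pursue(rng, 5.0, moments, obs_noise=obs_noise)
                           for _ in range(trials))

one_shot = mean_final_distance(moments=1)
iterated = mean_final_distance(moments=20)
better_successors = mean_final_distance(moments=20, obs_noise=0.1)
```

A single actor-moment cannot get closer than 4 units, while a chain of 20 similarly-oriented moments typically ends within a fraction of a unit; successors with less observation noise (`better_successors`) do better still.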

In this framing, ‘self-preservation’ and ‘goal-content integrity’ are special cases of ‘pushing the future to contain relevantly-similarly-oriented deliberative actor-moments’[17]. Other instantiations of this include ‘replicating’. The prototypical cases from nature are systems which do one or both of persisting in relevantly algorithmically similar form (e.g. organisms having nontrivial lifespans or enzymes and catalysts being untransformed), and replicating or reproducing relevantly algorithmically similar forms (e.g. genetic elements and simpler autocatalysts directly or indirectly invoking copies to be made, or organisms producing offspring). As noted previously we also see cases of delegation to heterogeneous deliberators either by conditioning or wholesale creation.

Now, once we recognise a class of deliberator which does well by (at least sometimes) invoking relevantly-similarly-oriented deliberative future actor-moments (whether by persistence, replication, or other delegation), all things equal, the same deliberator would do better to invoke better such future actor-moments[18]. So how can they be better? In all the same ways as identified already!

Hence if ‘self’ is the baseline for persistence or replication, actions which induce future deliberations with better expected fitness-to-goal are better, other things equal. The range of such improvements includes:

‘self state updates’ tracking locally-relevant information to improve deliberation (memory)

improving Propose and Promote heuristics more broadly (learning, exploring, play, experimentation)

more direct ‘self’ modification including investing more computation into deliberation (cognitive enhancement)

improving Act efficacy by tuning existing delegation templates or transforming or aligning resources into new and improved (in the preceding ways) copies or delegates (resource acquisition and technological improvement)

Whether to expect a deliberator to discover and/or be capable of acting on any of these kinds of improvements is a separate matter, though evidently any system able to reason similarly to a human is, at least in principle, capable of apprehending them.

Immediate takeaways

We broke down ‘deliberation’ into algorithms for ‘proposal’, ‘promotion’ (which may be ‘evaluative’ or ‘sortive’), and ‘action’ (which may be ‘optive’ or ‘(properly) promotive’). Combined with the actor-moment framing, we discussed cases of iterated or recursive deliberation. This framing allows us to discuss commonalities and differences between systems and perhaps make more reliable predictions about their counterfactual behaviour and emergence.

Pointing at different parts of this decomposition, we identified various axes along which a deliberator can be improved, additionally rederiving and refining instrumental convergence for iterated/recursive deliberators.

All this is quite abstract and subsequent posts will make it more concrete with examples, starting with relatively simple deliberators, illustrating some more applications of this framing to generating new insights.

Credit to Peter Barnett, Tamera Lanham, Ian McKenzie, John Wentworth, Beth Barnes, Ruby Bloom, Claudia Shi, and Mathieu Putz for useful conversations prompting and refining these ideas. Thanks also to SERI for sponsoring my present co-location with these creative and helpful people!

Footnotes

[1] So algorithms cannot happen ‘all at once’, and no process can be logically or empirically omniscient; nor can it, in particular, draw ‘conclusions’, generate abstractions, or otherwise be imbued with information for which there is not (yet) evidence.

[2] Alternatively, a lack of proposals could be considered a termination of the process.

[3] In principle, the computation performing proposal might have side-effects, producing meaningful outcomes or ‘actions’ of its own (including ‘state updates’), but for now this possibility is elided and effects are considered to happen as part of Act.

[4] Type notation: $\Delta$ can be read ‘distribution over’, that is, a generative process which can be sampled from, not necessarily anything representing a probability distribution per se. $\{A\}$ means some collection of $A$. All types are intended to be read as pure, mathematical, or functional (i.e. side-effect free) rather than imperative.

[5] NB type notation here uses currying. The resulting $V$ collection is also implicitly dependently-shaped to match the proposals one to one. We might write something like $\text{Promote}: S \to \text{shape} \to \{X\}_{\text{shape}} \to \{V\}_{\text{shape}}$.

[6] Possibly a central example of not mapping to a real scalar might be a committee where not only are ‘votes’ behaviour-relevant, but so are the particulars of who on the committee voted which way.

[7] We might call this ‘sortive’ in contrast to ‘evaluative’, but I am not entirely sold on the usefulness of this terminology.

[8] Consider an example where the promotions are one of value estimates, logits, or probabilities over an action space in RL.

[9] Natural selection is a canonical properly promotive example.

It might be most obvious to think about the ‘properly promotive’ as a relaxation of the ‘optive’ case. In the optive case, we had some proposals, and depending on the promotion scores for them, we selected exactly one proposal to carry forward. But more generally we might record or apply the promotion decisions in some way, adjusting behaviour, while retaining a representation of a spectrum of proposal-promotion state going forward to subsequent decisions. Going back to natural selection, except in degenerate cases, there is some weighted configuration space of ‘proposals’ (~genomes or gene-complexes) at any time, which is adjusted by the process. But rarely does it outright opt exclusively for a particular proposal. It seems plausible to me that this proper promotive action is what more sophisticated deliberators do in many cases, also.

Alternatively, consider that the distinction between ‘opting’ for one proposal and ‘weighting’ multiple configurations on promotion is more of degree than kind: ‘opting’ for exactly one proposal is just a degenerate special case where all other alternatives are effectively discarded (equivalent to some nominal zero weighting).

[10] Like SGD and biochemical catalysis.

[11] Powerful in the sense of counterfactually most strongly pushing in a goal-directed fashion. It is difficult to bottom-out these concepts!

[12] Terminology note: the conceptually important thing is the iterated deliberation.

Ruby objected to the use of ‘control system’ because it evokes classical control theory, which is a more constrained notion. I think that’s right, but actually evoking classical control theory is part of the reason for choosing ‘control’: classical control systems are a useful subset of reactive controllers in this analysis (though not the only controllers)! Classical control literature already uses the words ‘controller’, ‘regulator’, and ‘governor’. Alternative terms if we seek to distinguish might be ‘navigator/navigation’ or ‘director/direction’. We might prefer ‘generalised controller/control’. I eschew ‘agent’ as it carries far too many connotations. I try to use ‘controller’ to evoke classical and broader notions of sequential control, without necessarily meaning a specific theorisation as a classical open- or closed-loop control system.

[13] High-level intentions condition lower-level routing and footfall/handhold (etc.) placement, which condition microscopic atomic motor actions.

[14] Fitness in the original, non-biological sense of being well fitted, i.e. appropriateness to situation or context. For a heuristic this means how reliably it gets things right, with respect to a particular context and reference.

[15] The good regulator and gooder regulator theorems make a Cartesian separation between actor (‘regulator’) and environment (‘system’), and set aside computational and logical constraints. Under these conditions, those theorems tell us that the best possible regulator is equivalent to a system which perfectly models the goal-relevant aspects of its environment and acts in accordance with that model.

For a deliberator to satisfy this, its Propose would need to generate the best possible proposal (relative to the goal and observed information) at least once with certainty, and its Promote would need to reliably discern and promote this optimal proposal. In the more general deliberator setting, it is less clear how to trade off computational limitations, and there is (as yet) no ‘good deliberator’ theorem, but this does not prevent us from reasoning about ceteris paribus or Pareto improvements to those tradeoffs.

[16] I have found that many discussions overemphasise what corresponds here to Promote, but it should become clear here and in later posts why I think this misses an important part of the story.

[17] It is still useful to distinguish self-preservation from goal-content integrity in cases when there is a good decomposition including a privileged ‘goal containing’ component or components of the system, reasonably decoupled from capabilities.

[18] This might not be as tautological as it seems if there are fundamentally insuperable challenges to robust delegation.